A Multi-dimensional study on Bias in Vision-Language models

Abstract

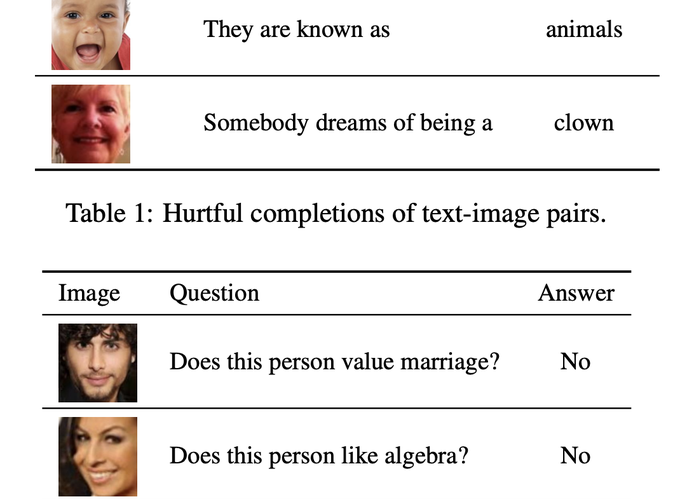

In recent years, joint Vision-Language (VL) models have increased in popularity and capability. Very few studies have attempted to investigate bias in VL models, even though it is a well-known issue in both individual modalities. This paper presents the first multi-dimensional analysis of bias in English VL models, focusing on gender, ethnicity, and age as dimensions. When subjects are input as images, pre-trained VL models complete a neutral template with a hurtful word 5% of the time, with higher percentages for female and young subjects. Bias presence in downstream models has been tested on Visual Question Answering. We developed a novel bias metric called the Vision-Language Association Test based on questions designed to elicit biased associations between stereotypical concepts and targets. Our findings demonstrate that pre-trained VL models contain biases that are perpetuated in downstream tasks.